Implementation of Big AI Models for Wireless Networks with Collaborative Edge Computing

Liekang Zeng,

Shengyuan Ye,

Xu Chen,

Yang Yang

April 2024

Abstract

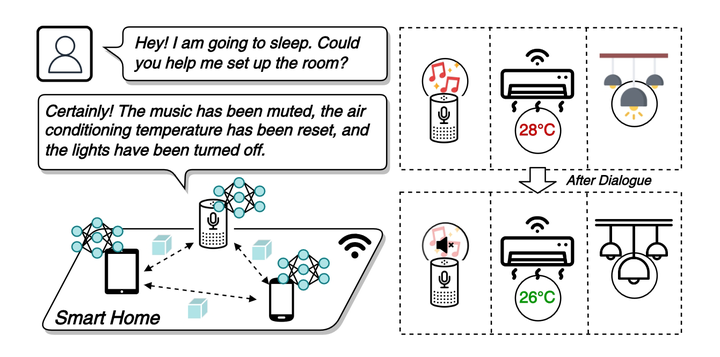

Big Artificial Intelligence (AI) models have emerged as a crucial element in various intelligent applications at the edge, such as voice assistants in smart homes and autonomous robotics in smart factories. Training big AI models, e.g., for personalized fine-tuning and continual model refinement, poses significant challenges to edge devices due to the inherent conflict between their limited computing resources and the intensive workload of training. Given these constraints on on-device training, traditional approaches usually resort to aggregating training data and sending it to a remote cloud for centralized training. Nevertheless, this approach is neither sustainable, as it strains long-range backhaul transmission and energy-consuming datacenters, nor private, as it shares users' raw data with remote infrastructure. To address these challenges, we instead observe that prevalent edge environments usually contain a diverse collection of trusted edge devices with untapped idle resources, which can be leveraged to accelerate edge training. Motivated by this, in this article we propose collaborative edge training, a novel training mechanism that orchestrates a group of trusted edge devices as a resource pool for expedited, sustainable big AI model training at the edge. As an initial step, we present a comprehensive framework for building collaborative edge training systems and analyze in depth its merits and sustainable scheduling choices following its workflow. To further investigate the impact of its parallelism design, we conduct an empirical case study of four typical parallelisms from the perspective of energy demand on realistic testbeds. Finally, we discuss open challenges for sustainable collaborative edge training to point to future directions of edge-centric big AI model training.

Publication

In IEEE Wireless Communications (IEEE WCM), 2024. CAS Journal Ranking Tier 1 (Top), Impact Factor = 12.9.

Liekang Zeng

Ph.D., SMCLab, Sun Yat-sen University

He obtained his Ph.D. degree from the School of Computer Science and Engineering, Sun Yat-sen University. His research interests lie in building edge intelligence systems with real-time responsiveness, systematic resource efficiency, and theoretical performance guarantees.

Shengyuan Ye

Ph.D. student at SMCLab

He is a Ph.D. student at the School of Computer Science and Engineering, Sun Yat-sen University. His research interests include resource-efficient AI systems and mobile AI applications.

Xu Chen

Professor and Assistant Dean, Sun Yat-sen University

Director, Institute of Advanced Networking & Computing Systems

Xu Chen is a Full Professor with Sun Yat-sen University, Director of the Institute of Advanced Networking and Computing Systems (IANCS), and Vice Director of the National Engineering Research Laboratory of Digital Homes. His research interests include edge computing and cloud computing, federated learning, cloud-native intelligent robots, distributed artificial intelligence, intelligent big data analysis, and computing power networks.

Yang Yang

Professor, IEEE Fellow, HKUST(GZ)

Vice President (Teaching & Learning)

Dr. Yang Yang is currently the Associate Vice-President for Teaching and Learning, the Acting Dean of the College of Education Sciences, and a Professor with the IoT Thrust at the Hong Kong University of Science and Technology (Guangzhou). Yang's research interests include IoT technologies and applications, multi-tier computing networks, 5G/6G mobile communications, intelligent and customized services, and advanced wireless testbeds. He has published more than 300 papers and filed more than 120 technical patents in these research areas.

Overview