Asteroid: Resource-Efficient Hybrid Pipeline Parallelism for Collaborative DNN Training on Heterogeneous Edge Devices

Shengyuan Ye, Liekang Zeng, Xiaowen Chu, Guoliang Xing, Xu Chen

November 2023

Abstract

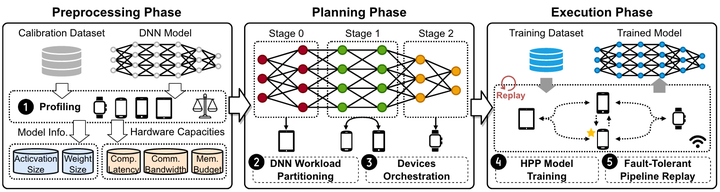

On-device Deep Neural Network (DNN) training has been recognized as crucial for privacy-preserving machine learning at the edge. However, the intensive training workload and limited onboard computing resources pose significant challenges to the availability and efficiency of model training. While existing works address these challenges through native resource management optimization, we instead leverage our observation that edge environments usually comprise a rich set of accompanying trusted edge devices with idle resources beyond a single terminal. We propose Asteroid, a distributed edge training system that breaks the resource walls across heterogeneous edge devices for efficient model training acceleration. Asteroid introduces a novel hybrid pipeline parallelism to orchestrate distributed training, along with a judicious parallelism optimization that maximizes throughput under given resource constraints. Furthermore, we develop a fault-tolerant yet lightweight pipeline replay mechanism that tames device-level dynamics for training robustness and performance stability. We implement Asteroid on heterogeneous edge devices with both vision and language models, and our evaluations show up to 12.2× faster training than conventional parallelism methods and 2.1× faster training than state-of-the-art hybrid parallelism methods. In addition, Asteroid recovers the training pipeline 14× faster than baseline methods while preserving comparable throughput despite unexpected device exits and failures.

Publication

In the Annual International Conference on Mobile Computing and Networking (MobiCom), Washington, USA, 30 Sept. - 4 Oct. 2024. CCF-A; the top conference in mobile computing and networking. Acceptance rate: 20.8% (103/494).

Shengyuan Ye

Ph.D. student at SMCLab

He is a Ph.D. student at the School of Computer Science and Engineering, Sun Yat-sen University. His research interests include resource-efficient AI systems and mobile AI applications.

Liekang Zeng

Ph.D., Sun Yat-sen University

He obtained his Ph.D. degree from the School of Computer Science and Engineering, Sun Yat-sen University. His research interests lie in building edge intelligence systems with real-time responsiveness, systematic resource efficiency, and theoretical performance guarantees.

Xiaowen Chu

Professor, HKUST(GZ)

Acting Head, Data Science and Analytics Thrust

Dr. Chu is currently a Professor at the Data Science and Analytics Thrust, Information Hub of HKUST(GZ), and an Affiliate Professor in the Department of Computer Science and Engineering, HKUST. His current research interests include GPU Computing, Distributed Machine Learning, Cloud Computing, and Wireless Networks. He is especially interested in the modelling, parallel algorithm design, application optimization, and energy efficiency of GPU computing.

Guoliang Xing

Professor, IEEE Fellow, CUHK

Director, CUHK AIoT Lab

Professor Xing’s research lies at the intersection of systems, embedded AI, algorithms, and physical domains including health, autonomous driving, and the environment. His research group develops new technologies at the frontier of mobile health, autonomous driving, Cyber-Physical Systems (CPS), the Internet of Things (IoT), wireless networks, and security and privacy.

Xu Chen

Professor and Assistant Dean, Sun Yat-sen University

Director, Institute of Advanced Networking & Computing Systems

Xu Chen is a Full Professor at Sun Yat-sen University, Director of the Institute of Advanced Networking and Computing Systems (IANCS), and Vice Director of the National Engineering Research Laboratory of Digital Homes. His research interests include edge computing and cloud computing, federated learning, cloud-native intelligent robots, distributed artificial intelligence, intelligent big data analysis, and computing power networks.

Asteroid Overview